Leverage Your Regulatory & Compliance Documents with Intelligent Processing

#rethinkcompliance Blog | Post from 20.05.2025

Financial compliance costs are skyrocketing. German financial institutions spend hundreds of millions of euros annually on regulatory reporting, and EMEA-wide financial crime compliance expenses reached $85 billion in 2023. AI-powered document processing is transforming decision-making, risk management, and compliance while saving companies time, reducing errors, and improving accuracy. In this article, we examine how technologies such as Retrieval-Augmented Generation (RAG), AI Agent-Assisted RAG (AA-RAG), and Graph Databases can improve and automate document processing in five steps.

- Extract Text from PDFs

Before analysing a document, we need to extract its text. Techniques include OCR (Optical Character Recognition) for scanned documents, direct text extraction for digital PDFs, metadata extraction, and table recognition.

- Prepare Data for Analysis (Chunking Methods)

Once text is extracted, it needs to be processed efficiently. Chunking methods like fixed-length, semantic, or embedding-based chunking help structure the data for better retrieval.

- Set Up a Retrieval Pipeline and Build a Chatbot

Implementing a retrieval pipeline allows interactive document querying. Using RAG techniques and similarity-based search, we can enable AI-driven Q&A and chatbot interactions.

- Automate Workflows with AI Agents

By reusing the retrieval pipeline, we can build an AI agent that automates decision-making based on extracted information, improving compliance workflows.

- Use the Extracted Data

The extracted insights can be stored in a database or a Graph Database to enable advanced analytics, automated compliance monitoring, and intelligent decision-making.

But let's start from the beginning. It is Monday morning, and a PDF document arrives in your hands. Instead of spending the rest of the week manually extracting information from your documents, you choose a smarter approach – leveraging our AI-powered document processing solutions. Let’s see what happens in the background. Where do we use each of these technologies, and how do they benefit us?

Step 1: Extract Text from PDF

Before any analysis can happen, we need to extract the text from our PDF. There are many techniques for text extraction, but the main ones are outlined below:

- OCR – Extracts text from scanned PDFs/images.

- PDF to Text Extraction – If the PDF is digitally created, tools like PyMuPDF or PDFMiner extract structured text.

- Metadata Extraction – Captures document properties (e.g., author, date, version).

- Table and List Recognition – Identifies structured elements.

Without clean, well-structured text, downstream AI models can misinterpret or overlook document details. Extracting text from a PDF document is therefore the foundation for building an intelligent analysis app.
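As a rough illustration of how these techniques fit together, the sketch below tries direct text extraction first and flags pages that probably need OCR. It assumes PyMuPDF is installed (imported as `fitz`). The helper names `needs_ocr` and `extract_pdf` and the 20-character threshold are illustrative assumptions, not part of any library.

```python
from typing import Dict, List

MIN_CHARS_PER_PAGE = 20  # assumed threshold: below this, treat the page as a scan


def needs_ocr(page_text: str, min_chars: int = MIN_CHARS_PER_PAGE) -> bool:
    """Heuristic: a page with almost no extractable text is probably scanned."""
    return len(page_text.strip()) < min_chars


def extract_pdf(path: str) -> Dict[str, object]:
    """Return metadata, per-page text, and the page numbers to send to OCR."""
    import fitz  # PyMuPDF; imported lazily so needs_ocr() works without it

    pages: List[str] = []
    ocr_pages: List[int] = []
    with fitz.open(path) as doc:
        metadata = dict(doc.metadata or {})  # e.g. author, title, creationDate
        for number, page in enumerate(doc, start=1):
            text = page.get_text()  # plain text of a digitally created page
            pages.append(text)
            if needs_ocr(text):
                ocr_pages.append(number)
    return {"metadata": metadata, "pages": pages, "ocr_pages": ocr_pages}
```

Pages listed in `ocr_pages` would then go to an OCR engine of your choice. Table recognition is not covered by this sketch.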

Step 2: Prepare Data for Analysis

Once the text is extracted, it can be passed to the LLM (Large Language Model). Currently, there are two approaches for extracting information from our documents: RAG and long context windows.

The latest large language models on the market offer a context window of 128k tokens, with a maximum output of approximately 16k tokens. This allows the LLM to assess documents of up to roughly 400 pages in a single call, depending on how dense the pages are.
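A back-of-the-envelope check of that page count can be sketched as follows. It assumes roughly 0.75 English words per token (a common rule of thumb, not a tokenizer measurement) and that the output budget is reserved inside the same window. German text usually needs more tokens per word, so real counts should come from the tokenizer of the model you use.

```python
def estimated_tokens(pages: int, words_per_page: int, words_per_token: float = 0.75) -> int:
    """Rough token estimate; real counts depend on tokenizer and language."""
    return round(pages * words_per_page / words_per_token)


def fits_in_context(pages: int, words_per_page: int,
                    context_tokens: int = 128_000,
                    reserved_for_output: int = 16_000) -> bool:
    """True if the document plus the reserved output budget fits in the window."""
    needed = estimated_tokens(pages, words_per_page) + reserved_for_output
    return needed <= context_tokens


print(estimated_tokens(400, 200))  # 400 sparse pages of about 200 words each
print(fits_in_context(400, 200))
print(fits_in_context(400, 500))   # 400 dense pages of about 500 words each
```

Under these assumptions, 400 sparsely filled pages fit into a single call, while 400 dense pages do not.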

At first glance, long context windows might seem like the ultimate solution – just dump the entire document in, right? But studies show that bigger isn’t always better¹ ² ³.

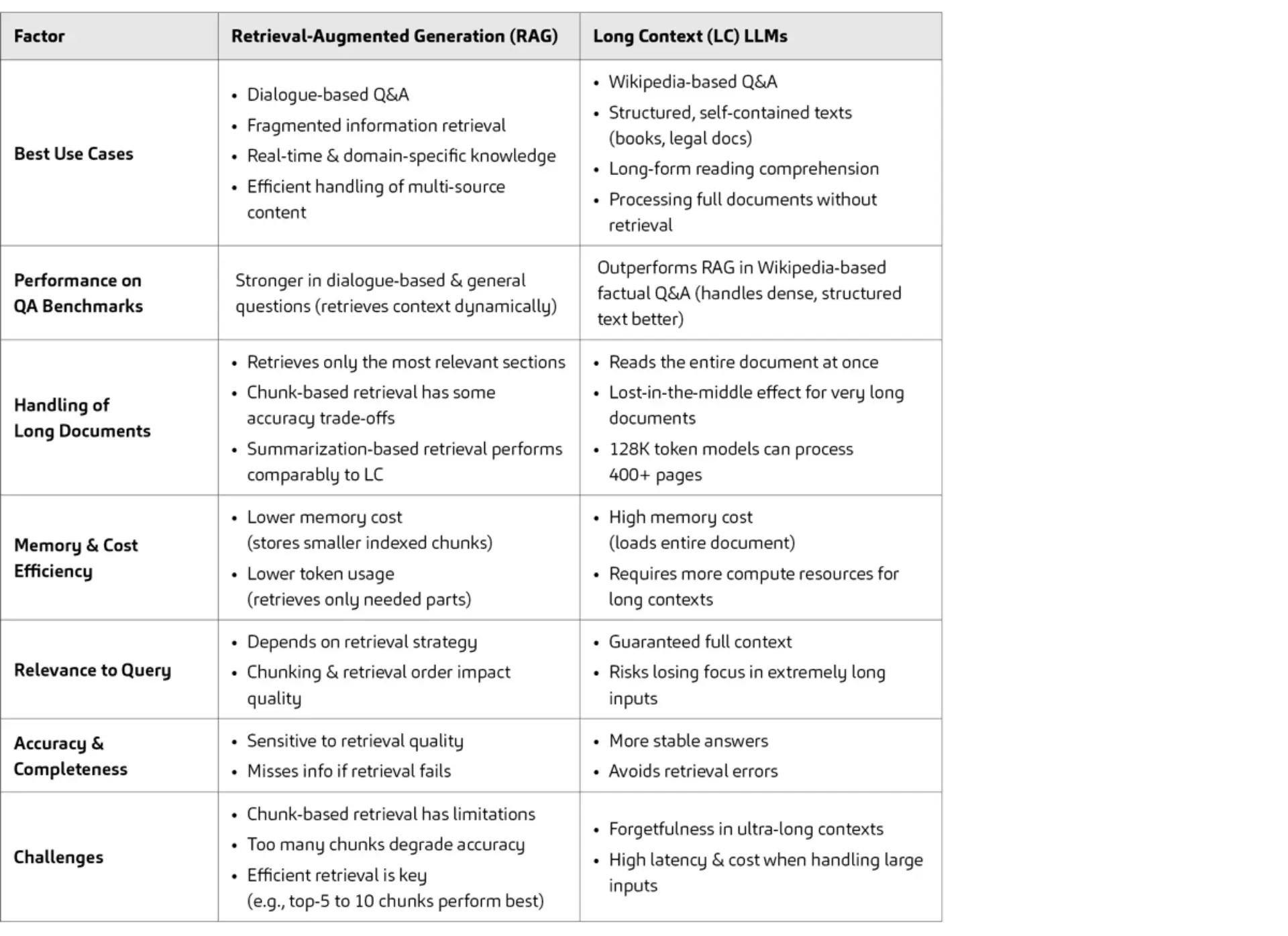

Comparison of RAG vs. Long Context (LC) in LLMs

This table compares Retrieval-Augmented Generation (RAG) and Long Context (LC) Large Language Models (LLMs) across seven key factors:

1. Best Use Cases

- RAG is well-suited for dialogue-based question answering, fragmented or multi-source information retrieval, and real-time or domain-specific knowledge. It's effective in environments where information is distributed across various sources and needs to be pulled on demand.

- LC LLMs excel in Wikipedia-style factual Q&A, structured and self-contained documents like legal texts or books, and tasks requiring comprehensive document understanding. They are ideal for processing entire documents without retrieval steps.

2. Performance on QA Benchmarks

- RAG performs better on dialogue-based and general knowledge questions by dynamically retrieving relevant content during inference.

- LC LLMs outperform RAG on structured, knowledge-dense tasks such as answering factual questions from Wikipedia, thanks to their ability to interpret full documents at once.

3. Handling of Long Documents

- RAG only retrieves the most relevant chunks from long documents. This can be efficient but may result in incomplete context or loss of nuance depending on how content is chunked or retrieved.

- LC LLMs can read and reason over entire documents, enabling deeper understanding. However, very long inputs (even within the 128K token range) can trigger a “lost-in-the-middle” effect, where key content in the center is deprioritized.

4. Memory and Cost Efficiency

- RAG is more efficient in terms of memory usage and token consumption because it loads only small, relevant segments of text.

- LC LLMs, by contrast, have higher memory and compute requirements since the entire document is loaded into the model’s context window.

5. Relevance to Query

- In RAG, relevance depends on the retrieval strategy. The chunking method and how retrieved sections are ranked significantly impact output quality.

- In LC LLMs, the entire context is always available, which ensures better coverage, but very long contexts may dilute focus.

6. Accuracy and Completeness

- RAG is sensitive to the quality of the retrieved chunks. If important context is missed during retrieval, the model may provide incomplete or incorrect answers.

- LC LLMs tend to provide more consistent and complete answers, as they do not rely on retrieval mechanisms that could fail.

7. Challenges

- RAG systems must balance chunk size, retrieval depth, and ranking accuracy. Too many or poorly chosen chunks can reduce overall performance.

- LC LLMs face issues with forgetfulness when handling ultra-long inputs and often suffer from high latency and cost due to their resource-heavy nature.

Context matters. For documents requiring nuanced understanding – such as legal, financial, or technical content – our AI-driven RAG methodologies ensure precise retrieval and contextual accuracy, delivering better results than simply processing the full text.

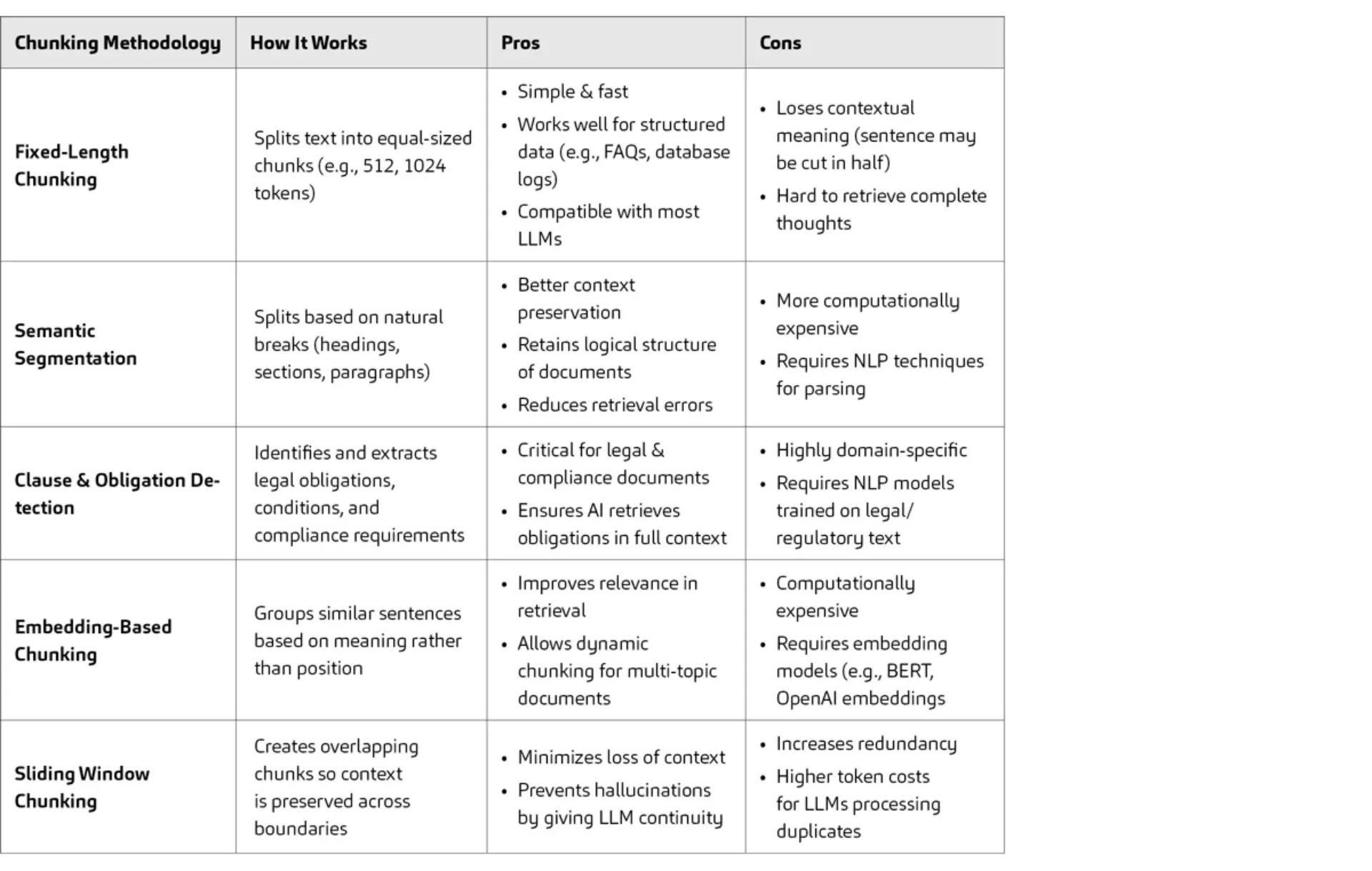

We can split text into meaningful chunks using semantic or embedding-based chunking, or simpler approaches such as Fixed-Length Chunking. Table 2 presents an overview of the chunking techniques:

Chunking Techniques

This table compares five different chunking methods used in the context of processing and retrieving text data for use in large language models (LLMs). Each method is evaluated based on how it works, its advantages, and its limitations.

1. Fixed-Length Chunking

- How it works: Splits text into equally sized chunks based on token count (e.g., 512 or 1024 tokens).

- Pros:

  - Simple and fast

  - Works well with structured data like FAQs or database logs

  - Compatible with most LLM architectures

- Cons:

  - May split sentences awkwardly, losing meaning

  - Difficult to ensure retrieval of full thoughts or concepts

2. Semantic Segmentation

- How it works: Splits text using natural document boundaries such as headings, sections, or paragraphs.

- Pros:

  - Better at preserving contextual meaning

  - Maintains the logical structure of documents

  - Reduces the likelihood of retrieval errors

- Cons:

  - More computationally intensive

  - Requires natural language processing techniques for accurate segmentation

3. Clause & Obligation Detection

- How it works: Uses NLP to detect legal obligations, conditional statements, and compliance requirements in text.

- Pros:

  - Especially useful for legal and compliance documents

  - Ensures obligations are retrieved with full contextual clarity

- Cons:

  - Very domain-specific

  - Requires models trained on legal or regulatory corpora

4. Embedding-Based Chunking

- How it works: Groups semantically similar sentences based on meaning, not position, using embeddings (e.g., BERT).

- Pros:

  - Enhances retrieval relevance

  - Allows for dynamic chunking across diverse topics

- Cons:

  - Computationally expensive

  - Requires use of embedding models

5. Sliding Window Chunking

- How it works: Creates overlapping chunks to preserve context across boundaries, often used to maintain continuity in LLM input.

- Pros:

  - Reduces loss of context at chunk boundaries

  - Helps prevent hallucinations by maintaining input continuity

- Cons:

  - Introduces redundancy

  - Increases token usage and processing cost for LLMs
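To make the simpler methods concrete, here is a minimal sketch of fixed-length chunking, sliding window chunking, and the most basic form of semantic segmentation (splitting on blank lines). Whitespace-separated words stand in for model tokens, which is an assumption for illustration; a real pipeline would count tokens with the tokenizer of the target model. All function names are illustrative.

```python
import re
from typing import List


def fixed_length_chunks(tokens: List[str], size: int) -> List[List[str]]:
    """Non-overlapping chunks of at most `size` tokens."""
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def sliding_window_chunks(tokens: List[str], size: int, overlap: int) -> List[List[str]]:
    """Chunks of `size` tokens; consecutive chunks share `overlap` tokens."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


def paragraph_chunks(text: str) -> List[str]:
    """Basic semantic segmentation: split on blank lines between paragraphs."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


tokens = ("Obligated entities must identify the customer before "
          "establishing a business relationship").split()
print(fixed_length_chunks(tokens, 5))
print(sliding_window_chunks(tokens, 5, 2))
```

In the sliding window output, the last two words of each chunk reappear at the start of the next one. That repetition is the redundancy listed under the cons, and it is the price for keeping context across chunk boundaries.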

Step 3: Set up retrieval pipeline & build a simplified chatbot

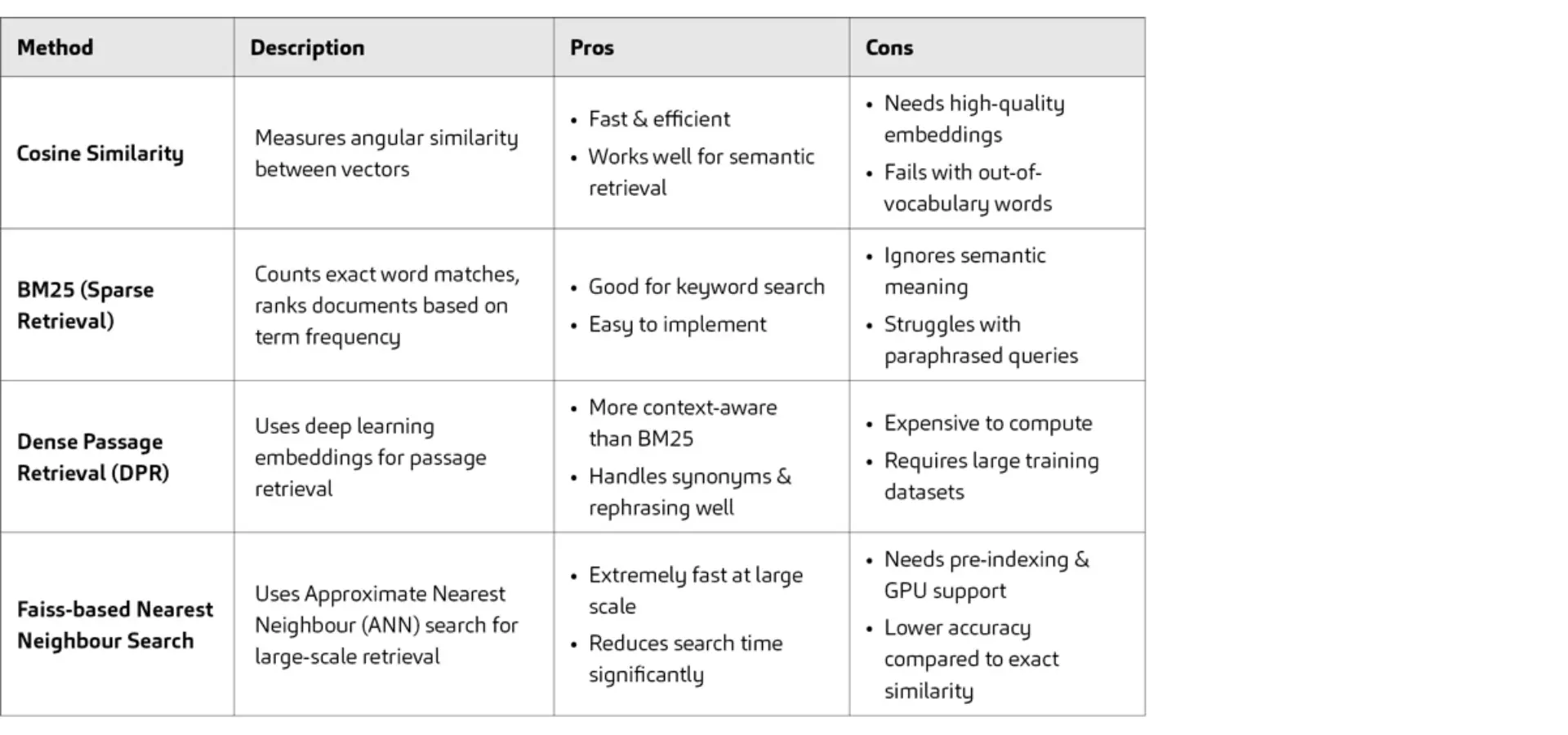

Next, we need a retrieval pipeline. There are multiple ways to set one up. The following table briefly covers the most commonly used techniques along with their strengths and weaknesses.

Comparison of Retrieval Methods

This table provides an overview of four common retrieval methods used in natural language processing and search applications. Each method is described alongside its advantages and disadvantages.

1. Cosine Similarity

Description: Measures the angular similarity between vector representations of text.

Pros: Fast and computationally efficient. Well-suited for semantic retrieval when embeddings are well-formed.

Cons: Depends heavily on high-quality embeddings. Performs poorly on out-of-vocabulary words or rare terms.

2. BM25 (Sparse Retrieval)

Description: A ranking function that scores documents based on exact word matches using term frequency and inverse document frequency.

Pros: Good for keyword-based searches. Simple and widely supported in search engines.

Cons: Ignores semantic meaning. Struggles with rephrased or synonym-based queries.

3. Dense Passage Retrieval (DPR)

Description: Uses deep learning-based embeddings to retrieve semantically relevant passages, often trained on QA data.

Pros: Captures context and semantics better than BM25. Handles synonyms and paraphrases well.

Cons: Computationally expensive. Requires large, labeled datasets for training.

4. Faiss-based Nearest Neighbour Search

Description: Applies Approximate Nearest Neighbour (ANN) search over dense embeddings using libraries like Faiss for large-scale retrieval.

Pros: Extremely fast at large scale. Reduces search time significantly.

Cons: Needs pre-indexing, and GPU support helps at very large scale. Approximate indexes can return slightly less accurate results than exact similarity search.
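For the sparse side of this comparison, the following sketch scores tokenized documents with the standard Okapi BM25 formula. The parameter defaults `k1=1.5` and `b=0.75` are commonly used values, and the three example sentences are made up for illustration.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: List[str], docs: List[List[str]],
                k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of each tokenized document for a tokenized query."""
    if not docs:
        return []
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term not in tf:
                continue  # exact matching only: unseen terms contribute nothing
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            length_norm = k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores


docs = [
    "the fine for late reporting is up to one million euros".split(),
    "penalties apply when a suspicious transaction is not reported".split(),
    "customers must be identified before onboarding".split(),
]
scores = bm25_scores("fine for late reporting".split(), docs)
print(scores)
```

The second document is about the same topic but uses "penalties" and "reported" instead of the query words, so it scores zero. This is the synonym weakness listed under the cons of BM25.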

For tasks like chatting with documents using RAG techniques, cosine similarity is widely used because it efficiently measures semantic closeness between text chunks and queries. This ensures accurate context retrieval without excessive computational overhead – one of the core optimizations we implement in our AI solutions.
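A minimal sketch of top-K retrieval by cosine similarity is shown below. The `embed` function is a toy bag-of-words stand-in for a real embedding model, so it only captures word overlap, not meaning. The example chunks are made up.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return dict(Counter(text.lower().split()))


def cosine_similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(value * b.get(term, 0.0) for term, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


chunks = [
    "banks must report suspicious transactions to the financial intelligence unit",
    "the annual report is published in march",
    "customer identification is required before onboarding",
]
for score, chunk in top_k("who must report suspicious transactions", chunks):
    print(f"{score:.3f}  {chunk}")
```

With a real embedding model, only `embed` changes; the similarity and ranking logic stay the same.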

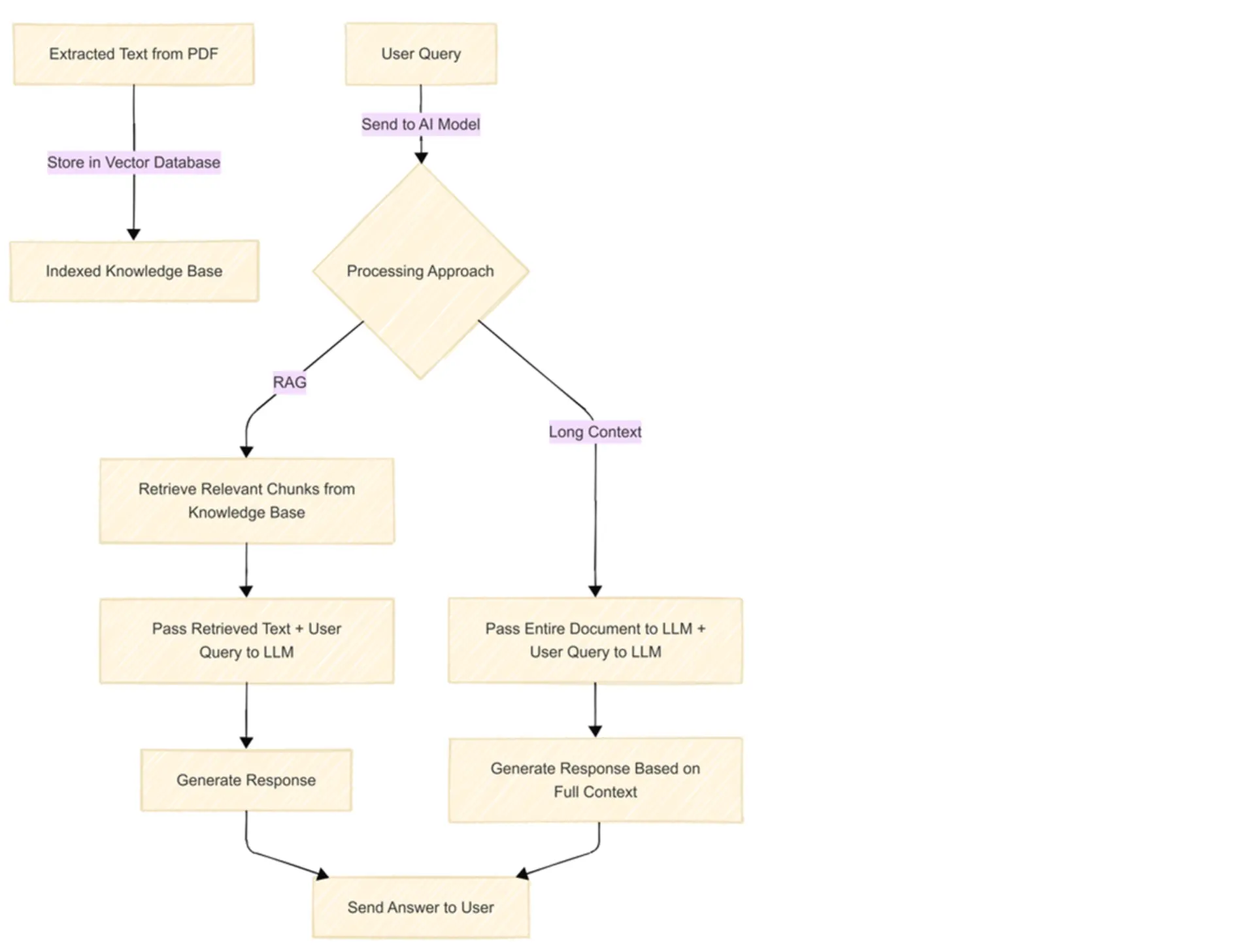

After retrieving the top K chunks, pass them to your chatbot as context, and you have a working chat application. Figure 1 shows a simplified flow diagram of a RAG or long-context-window chat.

Figure 1 Chat pipeline
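The chat flow can be sketched in a few lines. The `retrieve` and `llm` arguments are placeholders for whatever retriever and model API you use; `fake_retrieve` and `fake_llm` are hypothetical stand-ins with made-up sentences so the example runs on its own.

```python
from typing import Callable, List


def build_prompt(question: str, chunks: List[str]) -> str:
    """Number the retrieved chunks so the model can refer to them."""
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question: str,
           retrieve: Callable[[str, int], List[str]],
           llm: Callable[[str], str],
           k: int = 3) -> str:
    """Retrieve the top-k chunks, build the prompt, and ask the model."""
    chunks = retrieve(question, k)
    return llm(build_prompt(question, chunks))


# Hypothetical stand-ins: no vector store or model API is called here.
def fake_retrieve(question: str, k: int) -> List[str]:
    corpus = [
        "Obligated entities must report suspicious transactions.",
        "Records must be kept for a defined retention period.",
    ]
    return corpus[:k]


def fake_llm(prompt: str) -> str:
    return f"(model reply to a prompt of {len(prompt)} characters)"


print(answer("Who must report suspicious transactions?", fake_retrieve, fake_llm, k=1))
```

For the long-context variant, `retrieve` would simply return the whole document as a single chunk.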

Step 4: Automate Workflows with AI Agents

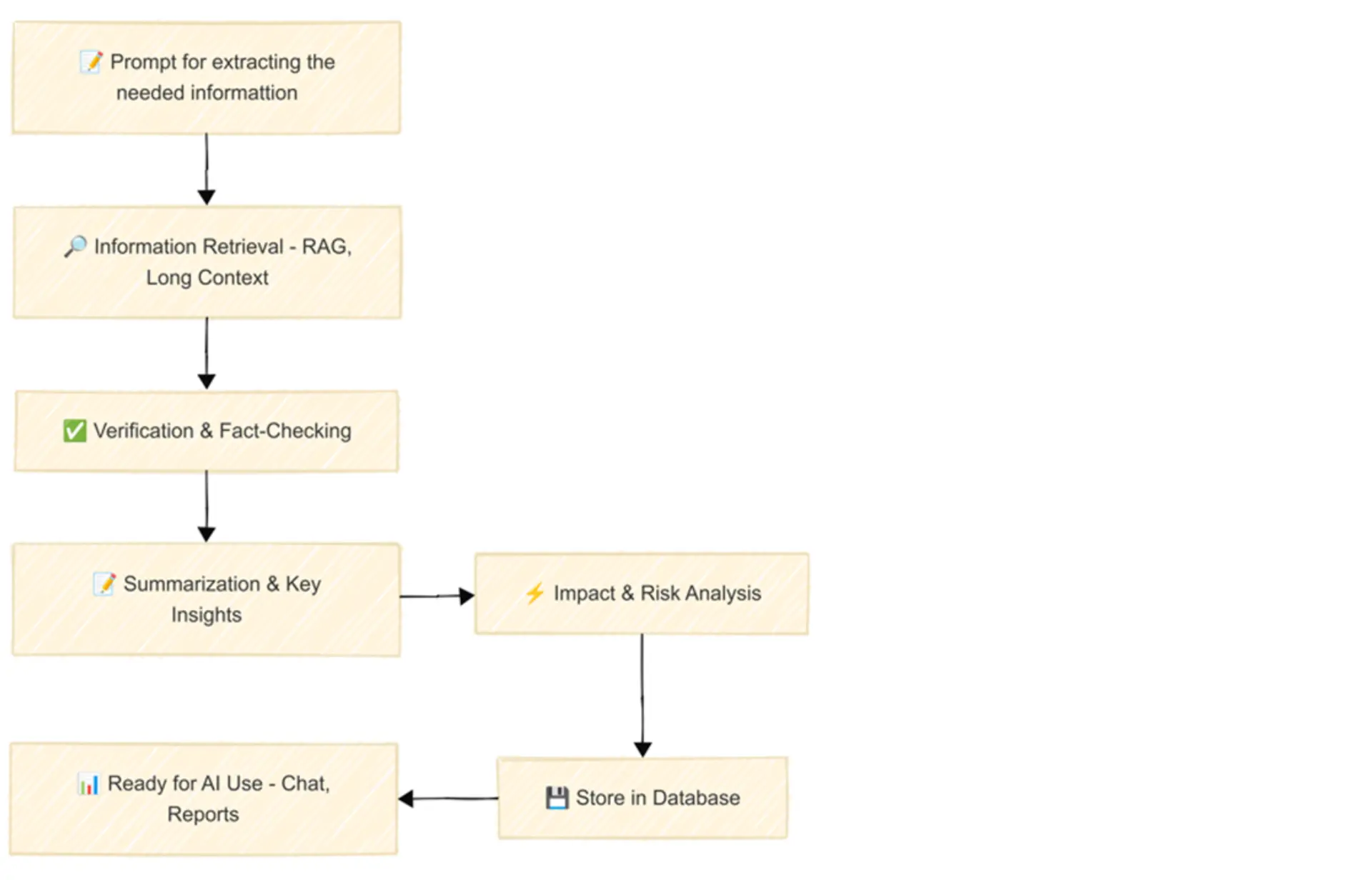

By reusing the information retrieval pipeline we built for chatbots or long-context processing and adding a simple database structure and some prompt engineering, we can automate the extraction of key information from the text ⁴.

A simple pipeline of an agent workflow can be found below:

Figure 2 Agent pipeline
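One possible shape of such a workflow is sketched below: for each field of interest, the agent retrieves context, asks the model for a JSON reply, validates it, and stores the result in a SQLite table. The field list, table layout, and the `fake_retrieve` and `fake_llm` stand-ins are all assumptions for illustration.

```python
import json
import sqlite3
from typing import Callable, Dict, List

# Hypothetical fields to extract; a real schema depends on the document type.
FIELDS: Dict[str, str] = {
    "obligated_party": "Who is obligated by this document?",
    "deadline": "What deadline applies to the obligation?",
}


def run_extraction_agent(doc_id: str,
                         retrieve: Callable[[str], List[str]],
                         llm: Callable[[str], str],
                         conn: sqlite3.Connection) -> None:
    """For each field: retrieve context, ask for JSON, validate, store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS extracted "
        "(doc_id TEXT, field TEXT, value TEXT, PRIMARY KEY (doc_id, field))"
    )
    for field, question in FIELDS.items():
        context = "\n".join(retrieve(question))
        prompt = (
            f"Context:\n{context}\n\nQuestion: {question}\n"
            'Reply with JSON only, e.g. {"value": "..."}; use null if not stated.'
        )
        try:
            value = json.loads(llm(prompt)).get("value")
        except (json.JSONDecodeError, AttributeError):
            value = None  # malformed reply: store NULL rather than guess
        if not isinstance(value, str):
            value = None  # accept only plain strings as extracted values
        conn.execute(
            "INSERT OR REPLACE INTO extracted VALUES (?, ?, ?)",
            (doc_id, field, value),
        )
    conn.commit()


# Hypothetical stand-ins so the sketch runs without a retriever or model API.
def fake_retrieve(question: str) -> List[str]:
    return ["The bank must file the report within 30 days."]


def fake_llm(prompt: str) -> str:
    return '{"value": "the bank"}' if "Who" in prompt else "not json"


conn = sqlite3.connect(":memory:")
run_extraction_agent("doc-1", fake_retrieve, fake_llm, conn)
print(conn.execute("SELECT field, value FROM extracted ORDER BY field").fetchall())
```

Storing NULL for replies that cannot be parsed keeps the table honest: a missing value can be flagged for manual review instead of being filled with a guess.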

Step 5: Use the Extracted Data

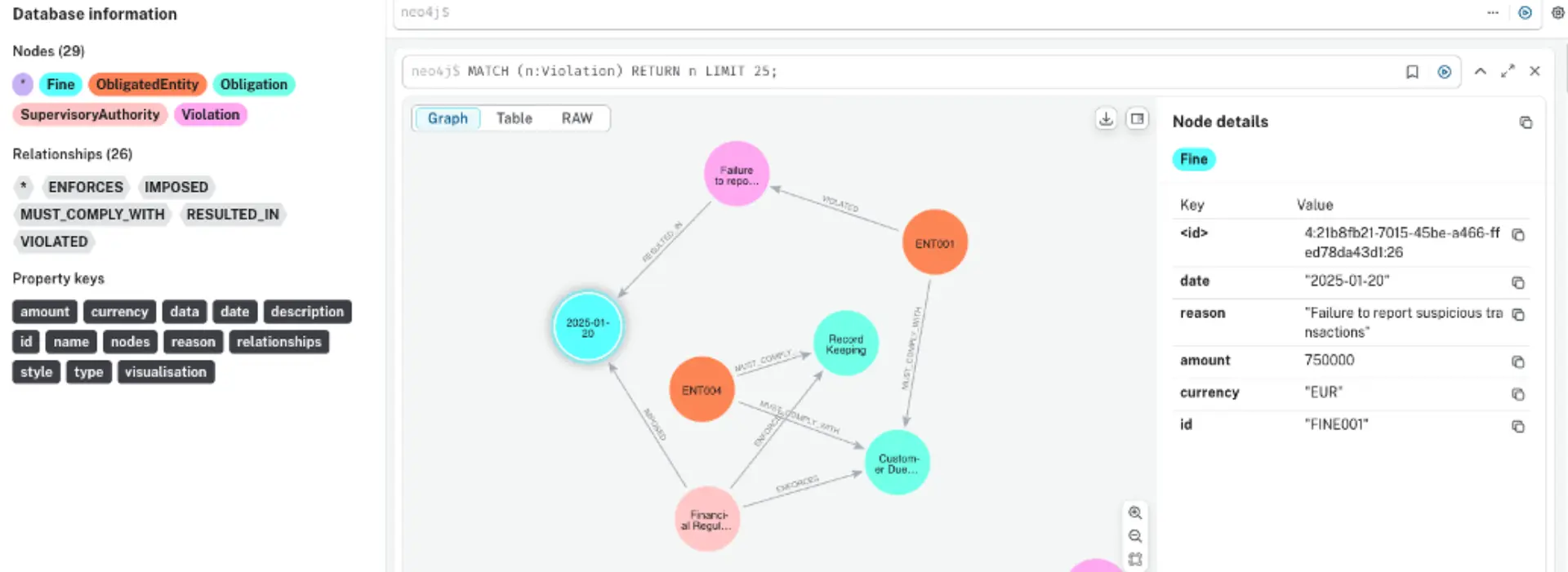

The extracted data, saved in the database (DB), can be used to create relation-based Graph Databases (Graph DBs).

Unlike traditional databases (SQL, NoSQL), which store data in tables or key-value pairs, a Graph Database (Graph DB) is optimized for relationship-based queries.

This is critical for legal, regulatory, and compliance documents, where entities (laws, obligations, companies) are connected in complex ways.

We can use the extracted data to create nodes and edges to enable complex question answering, such as:

- "Which laws reference GDPR compliance?"

- "What penalties apply for failing obligation X?"

These are questions for which traditional RAG or long-context LLMs often fail to provide accurate answers.
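The idea behind such relationship queries can be illustrated with a tiny in-memory graph of (source, relationship, target) triples. This is a sketch of the concept only, not a graph database. The node names and relationship types are made-up placeholders and do not represent real legal facts.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (source node, relationship, target node)

# Made-up placeholder triples for illustration only.
TRIPLES: List[Triple] = [
    ("Law A", "REFERENCES", "GDPR"),
    ("Law B", "REFERENCES", "GDPR"),
    ("Law A", "DEFINES", "Obligation X"),
    ("Obligation X", "VIOLATION_PENALIZED_BY", "Fine Y"),
    ("Regulator R", "IMPOSES", "Fine Y"),
]


class Graph:
    """Index edges in both directions so either end can be the starting point."""

    def __init__(self, triples: List[Triple]) -> None:
        self.outgoing: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
        self.incoming: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
        for source, relation, target in triples:
            self.outgoing[(source, relation)].add(target)
            self.incoming[(target, relation)].add(source)

    def targets(self, source: str, relation: str) -> List[str]:
        return sorted(self.outgoing.get((source, relation), ()))

    def sources(self, target: str, relation: str) -> List[str]:
        return sorted(self.incoming.get((target, relation), ()))


graph = Graph(TRIPLES)

# "Which laws reference GDPR compliance?"
print(graph.sources("GDPR", "REFERENCES"))

# "What penalties apply for failing obligation X?" plus who imposes them (two hops)
for fine in graph.targets("Obligation X", "VIOLATION_PENALIZED_BY"):
    print(fine, "imposed by", graph.sources(fine, "IMPOSES"))
```

The second query follows two edges in a row. Multi-hop lookups of this kind are what a graph database is optimized for, and they are hard to answer reliably from isolated text chunks.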

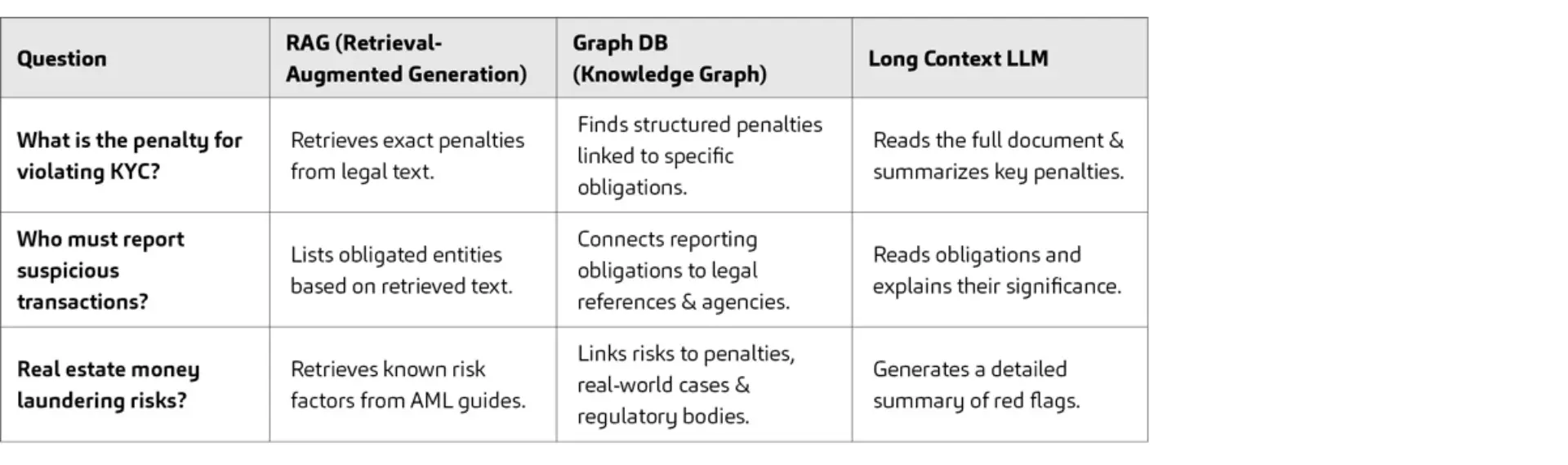

The summary below shows what kind of answer each approach is expected to produce, for example for questions about the GwG (German Anti-Money Laundering Act).

Comparison of Approaches for Question Answering

What is the penalty for violating KYC?

- RAG (Retrieval-Augmented Generation): Retrieves exact penalties from legal text.

- Graph DB (Knowledge Graph): Finds structured penalties linked to specific obligations.

- Long Context LLM: Reads the full document and summarizes key penalties.

Who must report suspicious transactions?

- RAG (Retrieval-Augmented Generation): Lists obligated entities based on retrieved text.

- Graph DB (Knowledge Graph): Connects reporting obligations to legal references and agencies.

- Long Context LLM: Reads obligations and explains their significance.

Real estate money laundering risks?

- RAG (Retrieval-Augmented Generation): Retrieves known risk factors from AML guides.

- Graph DB (Knowledge Graph): Links risks to penalties, real-world cases, and regulatory bodies.

- Long Context LLM: Generates a detailed summary of red flags.

An example of what a simple graph DB with a simple, predefined schema could look like is shown below. Here we see the obligations that certain entities (e.g., banks) need to comply with, the related fines, who imposes them, and which entity was fined for which violation.

Figure 3 Neo4j example graph DB

Congratulations!

Using Intelligent Document Processing, you can now spend the rest of your week engaging in other tasks instead of manually extracting information! 🎉

Conclusion:

Transforming Compliance with AI-Powered Document Processing

Using AI-powered document processing has become essential as regulatory frameworks become more complex. Compliance teams find it challenging to stay up to date with traditional methods because they are resource-intensive, slow, and prone to errors. However, businesses can transform their compliance processes with the help of cutting-edge technologies like Graph Databases, AI Agent-Assisted RAG (AA-RAG), and Retrieval-Augmented Generation (RAG).

By intelligently extracting, analysing, and retrieving critical regulatory information, businesses can:

✅ Reduce manual workload and errors

✅ Improve accuracy in compliance reporting

✅ Gain real-time insights for better decision-making

✅ Ensure traceability and explainability in regulatory processes

Whether you’re navigating anti-money laundering (AML) regulations, financial compliance, or legal document processing, AI-driven intelligent document processing can enhance efficiency, mitigate risks, and ensure compliance in an ever-changing landscape.

Also Interesting

Not sure which approach – RAG, Long Context, or Graph Databases – is best for your use case? Dive deeper into real-world examples and AI-powered compliance strategies in our #rethinkcompliance Blog.

Ready to transform your compliance workflow with AI? Contact us today for a consultation or a live demo!

[1] Laban, P., Fabbri, A. R., Xiong, C., & Wu, C. S. (2024). Summary of a haystack: A challenge to long-context LLMs and RAG systems. arXiv preprint arXiv:2407.01370.

[2] Li, X., Cao, Y., Ma, Y., & Sun, A. (2024). Long Context vs. RAG for LLMs: An Evaluation and Revisits. arXiv preprint arXiv:2501.01880.

[3] Hui, Y., Lu, Y., & Zhang, H. (2024). UDA: A benchmark suite for retrieval augmented generation in real-world document analysis. arXiv preprint arXiv:2406.15187.

[4] Mandvikar, S. (2023). Augmenting intelligent document processing (IDP) workflows with contemporary large language models (LLMs). International Journal of Computer Trends and Technology, 71(10), 80-91.

Author

Stylianos Nikas, PhD

Lead Consultant AI Expert / Data Scientist

AI & ML Expert | Intelligent Document Analysis and Text Mining with Focus on Applications in Compliance | AWS and Azure AI Solution Architect